The new supercomputing cloud service GreenLake for Large Language Models will be available in late 2023 or early 2024 in the U.S., Hewlett Packard Enterprise announced at HPE Discover on Tuesday. GreenLake for LLMs will allow enterprises to train, tune and deploy large-scale artificial intelligence that is private to each individual business.

GreenLake for LLMs will be available to European customers following the U.S. launch, with an expected release window in early 2024.

HPE partners with AI software startup Aleph Alpha

“AI is at an inflection point, and at HPE we’re seeing demand from numerous customers beginning to leverage generative AI,” said Justin Hotard, executive vice president and general manager for HPC & AI Business Group and Hewlett Packard Labs, in a virtual presentation.

GreenLake for LLMs runs on an AI-native architecture spanning hundreds or thousands of CPUs or GPUs, depending on the workload. This flexibility within a single AI-native architecture makes it more efficient than general-purpose cloud options that run multiple workloads in parallel, HPE said. GreenLake for LLMs was created in partnership with Aleph Alpha, a German AI startup, which provided a pre-trained LLM called Luminous. The Luminous LLM can work in English, French, German, Italian and Spanish and can use text and images to make predictions.

The collaboration went both ways, with Aleph Alpha using HPE infrastructure to train Luminous in the first place.

“By using HPE’s supercomputers and AI software, we efficiently and quickly trained Luminous,” said Jonas Andrulis, founder and CEO of Aleph Alpha, in a press release. “We’re proud to be a launch partner on HPE GreenLake for Large Language Models, and we look forward to expanding our collaboration with HPE to extend Luminous to the cloud and offer it as-a-service to our end customers to fuel new applications for business and research initiatives.”

The initial launch will include a set of open-source and proprietary models for retraining or fine-tuning. In the future, HPE expects to provide AI specialized for tasks related to climate modeling, healthcare, finance, manufacturing and transportation.

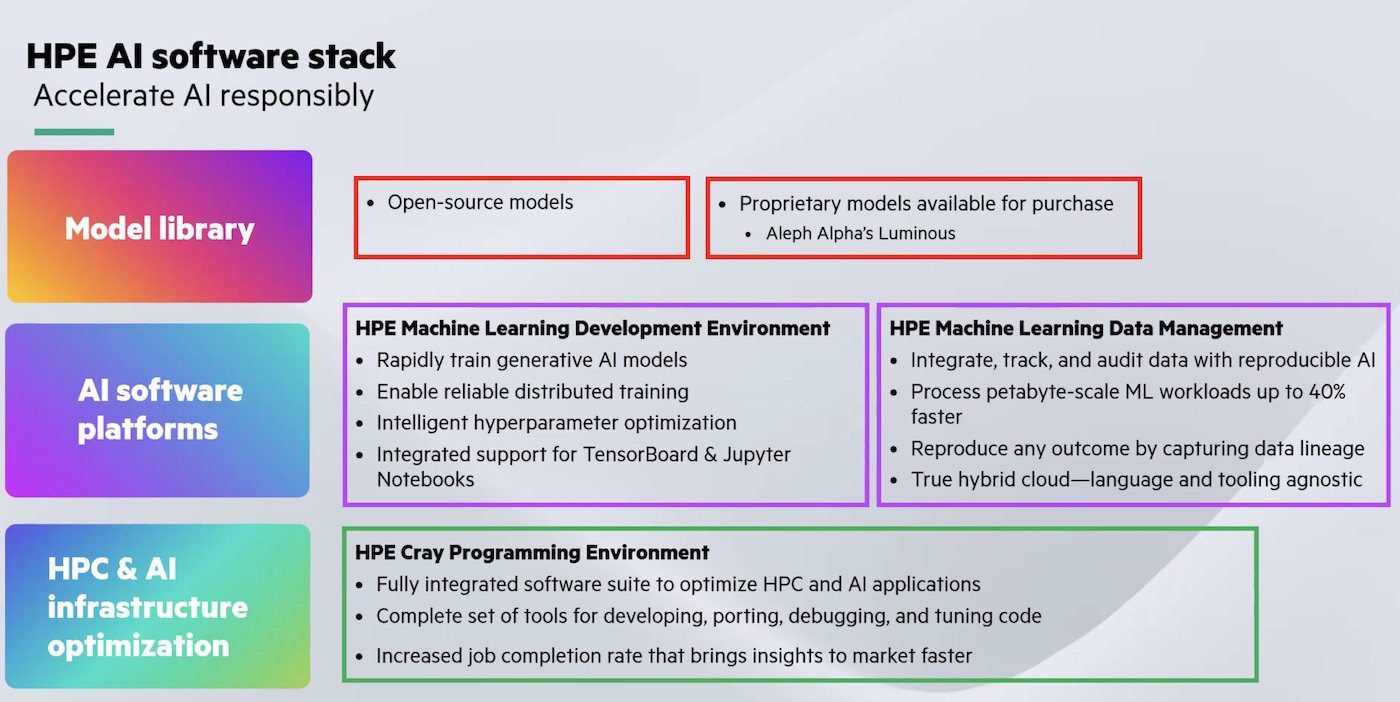

For now, GreenLake for LLMs will be part of HPE’s overall AI software stack (Figure A), which includes the Luminous model, machine learning development, data management and development programs, and the Cray programming environment.

Figure A

An illustration of HPE’s AI software stack.

HPE’s Cray XD supercomputers enable enterprise AI performance

GreenLake for LLMs runs on HPE’s Cray XD supercomputers and NVIDIA H100 GPUs. The supercomputer and HPE Cray Programming Environment allow developers to do data analytics, natural language tasks and other work on high-powered computing and AI applications without having to run their own hardware, which can be costly and require expertise specific to supercomputing.

Large-scale enterprise production for AI requires massive performance resources, skilled people, and security and trust, Hotard pointed out during the presentation.

Getting more power out of renewable energy

By using a colocation facility, HPE aims to power its supercomputing with 100% renewable energy. HPE is working with a computing center specialist, QScale, in North America on a design built specifically for this purpose.

“In all of our cloud deployments, the objective is to provide a 100% carbon-neutral offering to our customers,” said Hotard. “One of the benefits of liquid cooling is you can actually take the wastewater, the heated water, and reuse it. We have that in other supercomputer installations, and we’re leveraging that expertise in this cloud deployment as well.”

Alternatives to HPE GreenLake for LLMs

Other cloud-based services for running LLMs include NVIDIA’s NeMo (which is currently in early access), Amazon Bedrock, and Oracle Cloud Infrastructure.

Hotard noted in the presentation that GreenLake for LLMs will be a complement to, not a replacement for, large cloud services like AWS and Google Cloud Platform.

“We can and intend to integrate with the public cloud. We see this as a complementary offering; we don’t see this as a competitor,” he said.